In robotics and automation, precise movement and object detection are essential for machines to interact effectively with their environment. One example is Atlas, Boston Dynamics’ humanoid robot, which can exercise, perform physical tasks, and sort boxes with remarkable agility. For robots like Atlas to operate seamlessly in dynamic environments, however, they need accurate systems to detect and interact with the objects around them. Fiducial markers are one tool that plays a pivotal role in this task.

Understanding Fiducial Markers

Fiducial markers are essentially high-contrast codes, typically in the form of square black-and-white patterns, that can be used by machines to recognize their surroundings and determine the exact location of objects. While they may resemble QR codes, fiducial markers have a distinct advantage in that they can be detected from significantly greater distances. These markers act as reference points, enabling robots to position themselves, identify objects, and execute tasks with precision.
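As a rough illustration of how a square marker can carry an identity, the sketch below treats a marker as a small grid of black and white cells and looks it up in a dictionary of known patterns, trying all four rotations. The 3x3 grid size, the two dictionary entries, and the function names are assumptions made for this example; real marker dictionaries are larger and are designed so that patterns remain distinguishable under rotation and partial errors.

```python
from typing import Dict, Optional, Tuple

# A square grid of bits: 1 = white cell, 0 = black cell.
Grid = Tuple[Tuple[int, ...], ...]


def rotate_cw(grid: Grid) -> Grid:
    """Rotate a square bit grid 90 degrees clockwise."""
    return tuple(zip(*grid[::-1]))


# Hypothetical two-entry dictionary of 3x3 patterns (illustration only).
DICTIONARY: Dict[int, Grid] = {
    0: ((1, 0, 0), (0, 1, 0), (0, 0, 0)),
    1: ((1, 1, 0), (0, 0, 1), (0, 1, 0)),
}


def decode(observed: Grid) -> Optional[Tuple[int, int]]:
    """Return (marker_id, clockwise quarter-turns applied to match), or None."""
    candidate = observed
    for turns in range(4):
        for marker_id, pattern in DICTIONARY.items():
            if candidate == pattern:
                return marker_id, turns
        candidate = rotate_cw(candidate)
    return None


# A marker seen after three clockwise quarter-turns of pattern 1.
seen = rotate_cw(rotate_cw(rotate_cw(DICTIONARY[1])))
print(decode(seen))
```

Besides the ID, the number of quarter-turns needed to match tells the system how the marker is oriented, which is part of what makes such markers useful as reference points.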

In a logistics context, a camera mounted on top of a robot or other system can scan these markers to locate packages in a warehouse or distribution center. Automating package detection in this way can improve efficiency, reduce human error, and make better use of space and time. However, fiducial marker systems have long faced one significant challenge: the lighting conditions under which they operate. The classic machine vision techniques used to decode fiducial markers often struggle in low light or in the presence of shadows.

The Lighting Dilemma

The difficulty arises because many traditional machine vision systems rely heavily on consistent lighting to identify markers. In dim or poorly lit areas, these systems can fail to detect or accurately decode markers, which compromises the performance of the robots and automation systems that rely on them. This issue has long been a bottleneck for many industrial and logistics applications, where operations often occur in environments with fluctuating lighting conditions.
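A toy example can make the lighting problem concrete. The sketch below binarizes a single row of alternating white and black cells, half of which lies in shadow, first with one threshold for the whole row and then with a threshold computed from each pixel's neighbourhood. The pixel values, the 30% shadow factor, and the function names are assumptions chosen for illustration; this shows a classical thresholding issue, not the method used in the research discussed here.

```python
from typing import List


def global_threshold(row: List[float]) -> List[int]:
    """Binarize with a single threshold for the whole row (the global mean)."""
    t = sum(row) / len(row)
    return [1 if v > t else 0 for v in row]


def local_threshold(row: List[float], radius: int = 1) -> List[int]:
    """Binarize each pixel against the mean of its own neighbourhood."""
    out = []
    for i, v in enumerate(row):
        window = row[max(0, i - radius): i + radius + 1]
        out.append(1 if v > sum(window) / len(window) else 0)
    return out


# Ground truth: alternating white (1) and black (0) cells.
bits = [1, 0, 1, 0, 1, 0, 1, 0]
lit = [200.0 if b else 50.0 for b in bits]

# Hypothetical shadow: the right half keeps only 30% of its brightness.
shadowed = [v * (0.3 if i >= 4 else 1.0) for i, v in enumerate(lit)]

print(global_threshold(shadowed))  # white cells in the shadow are lost
print(local_threshold(shadowed))   # the alternating pattern is recovered
```

Local thresholding helps in this simple case, but it depends on window size and breaks down on uniform regions, strong noise, or very low contrast, which is the kind of brittleness that motivates learned approaches.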

A Breakthrough Solution: Neural Networks for Low-Light Detection

To address this problem, a team of researchers from the University of Córdoba, consisting of Rafael Berral, Rafael Muñoz, Rafael Medina, and Manuel J. Marín, has developed a neural network-based system capable of detecting and decoding fiducial markers in difficult lighting situations. Their work, published in the journal Image and Vision Computing, offers an end-to-end solution to the longstanding challenge of low-light detection in machine vision systems.

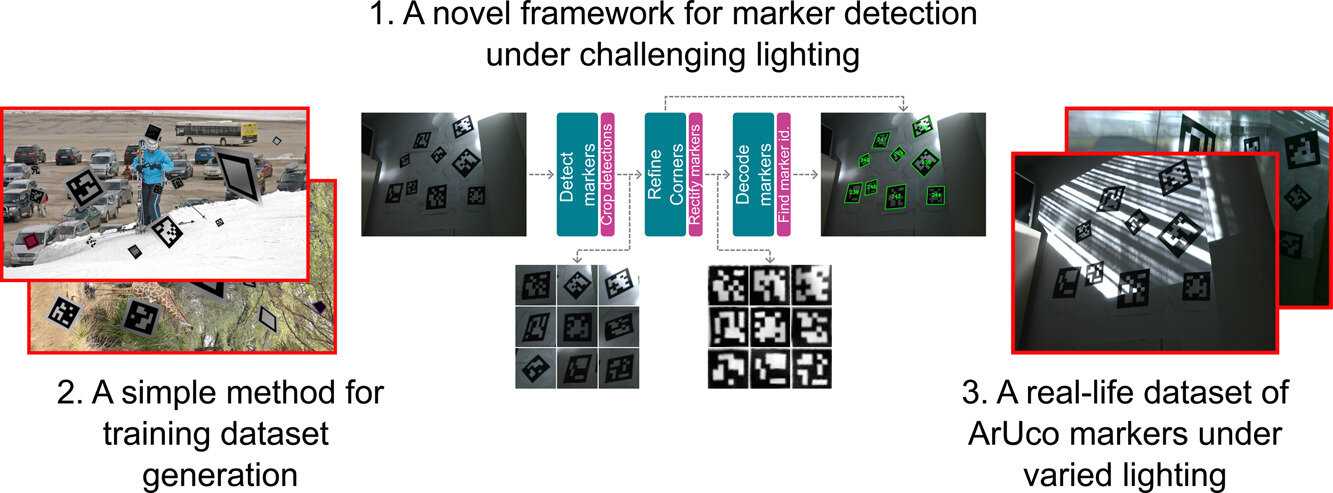

“The use of neural networks in the model allows us to detect this type of marker in a more flexible way, solving the problem of lighting for all phases of the detection and decoding process,” explained Berral. The key innovation in this system lies in its ability to handle the entire process of fiducial marker detection, refinement, and decoding through three distinct neural networks, each designed to tackle a different stage of the operation.

The Process of Marker Detection and Decoding

The system proposed by the University of Córdoba researchers breaks down the fiducial marker recognition process into three main phases:

- Marker Detection: The first step is detecting the marker within the image. The system scans the image for possible locations where the marker may appear, and this is accomplished by a neural network trained to spot the marker, even under poor lighting conditions.

- Corner Refinement: Once the marker is identified, the next step involves refining the corners of the marker. This ensures that the system can pinpoint the exact edges of the marker with high precision. Refining the corners is especially important in low-light scenarios where shadows or poor contrast can obscure the boundaries of the marker.

- Marker Decoding: The final step involves decoding the marker’s information. In this phase, another neural network interprets the marker’s encoded data, which is essential for the machine to know what the marker represents, such as a package ID or a location code.

Each of these steps is handled by a different neural network, creating an end-to-end solution that makes fiducial markers much more reliable across a wide range of lighting conditions.
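The three phases above can be pictured as a simple chain in which each stage consumes the previous stage's output. The sketch below shows only that structure: the type names, the `run_pipeline` function, and the three stub stages are hypothetical placeholders, and none of them reflects the researchers' actual code or models.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Sequence, Tuple

Image = Sequence[Sequence[float]]          # grayscale image as rows of pixels
Box = Tuple[int, int, int, int]            # x_min, y_min, x_max, y_max
Corners = Tuple[Tuple[float, float], ...]  # four (x, y) points


@dataclass
class MarkerResult:
    box: Box
    corners: Corners
    marker_id: Optional[int]  # None when decoding fails


def run_pipeline(
    image: Image,
    detect: Callable[[Image], List[Box]],
    refine: Callable[[Image, Box], Corners],
    decode: Callable[[Image, Corners], Optional[int]],
) -> List[MarkerResult]:
    """Run detection, then corner refinement and decoding for each candidate."""
    results = []
    for box in detect(image):
        corners = refine(image, box)
        results.append(MarkerResult(box, corners, decode(image, corners)))
    return results


# Placeholder stages for illustration only; a real system would call trained models.
def detect_stub(image: Image) -> List[Box]:
    return [(0, 0, len(image[0]) - 1, len(image) - 1)]  # pretend the marker fills the frame


def refine_stub(image: Image, box: Box) -> Corners:
    x0, y0, x1, y1 = box
    return ((x0, y0), (x1, y0), (x1, y1), (x0, y1))  # box corners, clockwise from top-left


def decode_stub(image: Image, corners: Corners) -> Optional[int]:
    return None  # no real decoder here, so report an unknown ID


results = run_pipeline([[0.0] * 4 for _ in range(4)], detect_stub, refine_stub, decode_stub)
print(results)
```

Passing the stages in as callables keeps them independent, so any one of them could be swapped for a different model or a classical method without touching the other two.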

Training the Model: Creating Real-World and Synthetic Data

To build a robust machine vision model capable of working in unfavorable lighting conditions, the researchers created a synthetic dataset that accurately mimics the types of lighting circumstances a robot might face in real-world scenarios. This dataset was designed to simulate not only dim lighting but also situations involving shadows, glare, and other lighting inconsistencies that could interfere with marker recognition.
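To give a sense of what simulating lighting conditions can involve, the sketch below applies three simple degradations to a grayscale image: overall dimming, a hard-edged shadow, and sensor-like noise. The functions, parameter ranges, and noise level are assumptions invented for this example and are not taken from the researchers' dataset generator; glare is omitted for brevity.

```python
import random
from typing import List

Image = List[List[float]]  # grayscale image, values in [0, 255]


def darken(image: Image, gain: float) -> Image:
    """Scale every pixel by `gain` (0 < gain <= 1) to simulate dim light."""
    return [[v * gain for v in row] for row in image]


def add_shadow(image: Image, start_col: int, strength: float) -> Image:
    """Attenuate all columns from `start_col` rightwards by the factor `strength`."""
    return [
        [v * (strength if x >= start_col else 1.0) for x, v in enumerate(row)]
        for row in image
    ]


def add_noise(image: Image, sigma: float, rng: random.Random) -> Image:
    """Add Gaussian noise and clamp the result to the valid pixel range."""
    return [
        [min(255.0, max(0.0, v + rng.gauss(0.0, sigma))) for v in row]
        for row in image
    ]


def augment(image: Image, rng: random.Random) -> Image:
    """Apply a random combination of dimming, shadow, and noise (assumed ranges)."""
    gain = rng.uniform(0.1, 1.0)
    start_col = rng.randrange(len(image[0]))
    strength = rng.uniform(0.2, 0.8)
    shaded = add_shadow(darken(image, gain), start_col, strength)
    return add_noise(shaded, sigma=5.0, rng=rng)


# A plain white 4x6 image stands in for a rendered marker.
sample = augment([[255.0] * 6 for _ in range(4)], random.Random(0))
```

Because the degradations are applied to images whose marker positions and IDs are already known, every augmented image comes with labels for free, which is one common reason to train on synthetic data.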

Once the model was trained using this synthetic data, the team tested it with real-world data. Some of the real-world images were captured by the researchers themselves, while others were taken from previous studies on fiducial marker detection. Training on synthetic data and evaluating on real images let the team check that the model copes with the variety of conditions that arise in practical applications.

The Open-Source Model

A major advantage of this new system is that the researchers have released both the training data and the code as open source. Developers, researchers, and companies can therefore test and implement the model in their own machine vision applications, which makes it easier for the technology to be adopted, tested, and further refined across industries.

Rafael Muñoz emphasized the accessibility of the system, stating, “The model has been tested with real-world data, and the code has been made publicly available. This means that anyone can now test the system with any image in which fiducial markers appear.”

Applications and Impact

The potential impact of this research extends far beyond robots like Atlas. With the ability to detect and decode fiducial markers in low-light and challenging environments, the technology can be used in a wide range of applications. In logistics, for instance, it can help automate package sorting and location identification in warehouses with varying lighting conditions. In robotics, this technology can improve the navigation and object manipulation capabilities of robots working in industrial settings, warehouses, or even outdoor environments at night.

Additionally, the system’s adaptability to different lighting conditions opens the door to applications in other fields, such as autonomous vehicles, drones, and augmented reality (AR), where environmental lighting can often fluctuate. The ability to navigate and interact with the world, even in low-light situations, could revolutionize industries that rely on automated vision systems.

Overcoming the Challenge of Low-Light Vision

The research conducted by the team at the University of Córdoba marks a significant advance in machine vision. By using neural networks to tackle low-light detection, the team has created a system that overcomes one of the major limitations of fiducial marker-based tracking. The implications reach beyond robotics to any system that relies on machine vision to interact with the world around it.

With both the data and the code released as open source, the solution can be readily adopted and adapted by industries around the world. Whether it’s robots working in the dark or drones navigating challenging environments, this work offers a flexible and reliable answer to one of the most persistent challenges in machine vision.

Reference: Rafael Berral-Soler et al., "DeepArUco++: Improved detection of square fiducial markers in challenging lighting conditions," Image and Vision Computing (2024). DOI: 10.1016/j.imavis.2024.105313