Neuromorphic computing, a field that draws on neuroscience to replicate the structure and function of the brain, is a growing area of research that could radically change how we approach computing. To compete effectively with conventional computing systems, however, it must scale to meet the demands of real-world applications. A review article published in Nature on January 22, 2025, outlines a detailed roadmap for achieving this goal. Authored by 23 researchers, including two from the University of California San Diego, the paper offers a fresh perspective on how neuromorphic systems can approach the cognitive capacity of the human brain while matching its compact form and low power consumption.

The authors make clear that neuromorphic computing is unlikely to have a one-size-fits-all solution. Instead, it will require a range of neuromorphic hardware tailored to different application needs. This is a critical insight, as it recognizes how hard it is to mimic the brain’s processing capabilities across domains as varied as scientific computing, artificial intelligence (AI), augmented and virtual reality, wearables, smart farming, and smart cities.

The Promise of Neuromorphic Computing

Neuromorphic chips, designed to mimic the behavior of biological neural networks, hold great promise for computing. Unlike conventional processors, which largely execute instructions sequentially on a clock, neuromorphic systems use massively parallel, event-driven computation: work is done only when a signal arrives. This lets them execute tasks with greater energy and space efficiency. The need is pressing, since the electricity consumption of AI systems is expected to double by 2026. Neuromorphic computing offers one possible answer, as it has the potential to outperform conventional systems in both power consumption and performance.
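
To make "event-driven" concrete, here is a minimal sketch of a leaky integrate-and-fire neuron whose state is updated only when an input spike arrives, rather than on every clock tick. It is an illustration, not a model taken from the Nature review: the function name `lif_event_driven` and the parameters `weight`, `tau`, and `threshold` are assumptions chosen for this example.

```python
import math


def lif_event_driven(spike_times, weight=0.6, tau=20.0, threshold=1.0):
    """Return output spike times of a leaky integrate-and-fire neuron.

    Hypothetical illustration: the membrane potential is touched only when
    an input spike arrives, so the work scales with the number of events,
    not with the length of the simulated time window.
    """
    v = 0.0        # membrane potential
    last_t = 0.0   # time of the previous input event
    out = []
    for t in sorted(spike_times):
        # Apply the exponential leak accumulated since the last event.
        v *= math.exp(-(t - last_t) / tau)
        # Integrate the incoming spike.
        v += weight
        last_t = t
        # Fire and reset when the threshold is crossed.
        if v >= threshold:
            out.append(t)
            v = 0.0
    return out


# Two bursts of closely spaced input spikes separated by a long silence.
print(lif_event_driven([1.0, 2.0, 100.0, 101.0, 102.0]))
```

In this sketch the long silence between the two bursts costs nothing: no updates are performed until the next spike arrives. Widely spaced inputs leak away before they can add up, so only closely spaced spikes make the neuron fire.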

Neuromorphic systems function in a way that is more akin to the human brain. Whereas conventional systems keep the processor and memory separate and shuttle data between them, neuromorphic systems place memory and processing together on the same chip, which can make computation faster and more efficient. As AI continues to advance, the scalability and energy efficiency of neuromorphic computing could become critical in reducing the carbon footprint of increasingly complex AI models.

Key Challenges and Opportunities

The article’s authors underscore several challenges that need to be addressed before neuromorphic systems can scale effectively. One of the primary features that the authors highlight is sparsity, which is central to the functioning of the human brain. In the brain, neural connections are initially formed densely, only to be pruned selectively over time. This process, known as synaptic pruning, ensures that the brain uses its resources efficiently by retaining only the most essential connections. If this principle of sparsity can be effectively emulated in neuromorphic systems, the result could be highly efficient systems that consume less energy and occupy less physical space.
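
As a rough software analogy for pruning, the sketch below starts from a dense weight matrix and keeps only the strongest connections, zeroing the rest. Magnitude-based pruning is a common machine-learning technique used here purely for illustration. It is not presented as the method the review proposes, and the names `prune_by_magnitude`, `sparsity`, and `keep_fraction` are assumptions of this example.

```python
def prune_by_magnitude(weights, keep_fraction):
    """Zero out all but the largest-magnitude weights.

    Hypothetical illustration of sparsity: `weights` is a list of rows and
    `keep_fraction` is the share of connections to retain. Ties at the
    cutoff are all kept, so slightly more than the requested share may
    survive.
    """
    magnitudes = sorted((abs(w) for row in weights for w in row), reverse=True)
    n_keep = max(1, round(keep_fraction * len(magnitudes)))
    cutoff = magnitudes[n_keep - 1]
    return [[w if abs(w) >= cutoff else 0.0 for w in row] for row in weights]


def sparsity(weights):
    """Fraction of connections that are exactly zero."""
    total = sum(len(row) for row in weights)
    zeros = sum(1 for row in weights for w in row if w == 0.0)
    return zeros / total


dense = [[0.9, -0.1, 0.05, 0.4],
         [0.02, -0.8, 0.3, -0.01]]
pruned = prune_by_magnitude(dense, keep_fraction=0.5)
print(pruned, sparsity(pruned))
```

A sparse matrix like `pruned` needs storage and computation only for its nonzero entries, which is the source of the energy and area savings described above.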

In their roadmap, the authors emphasize the need for massive parallelism and hierarchical structures to emulate the brain’s functionality. In particular, they suggest mimicking the organization of the brain’s gray matter and white matter. Gray matter is responsible for local synaptic connectivity, while white matter facilitates communication between different regions of the brain. By combining these features with high-bandwidth, reconfigurable interconnects that allow for seamless communication across chips, neuromorphic systems could achieve significant advances in both performance and scalability.

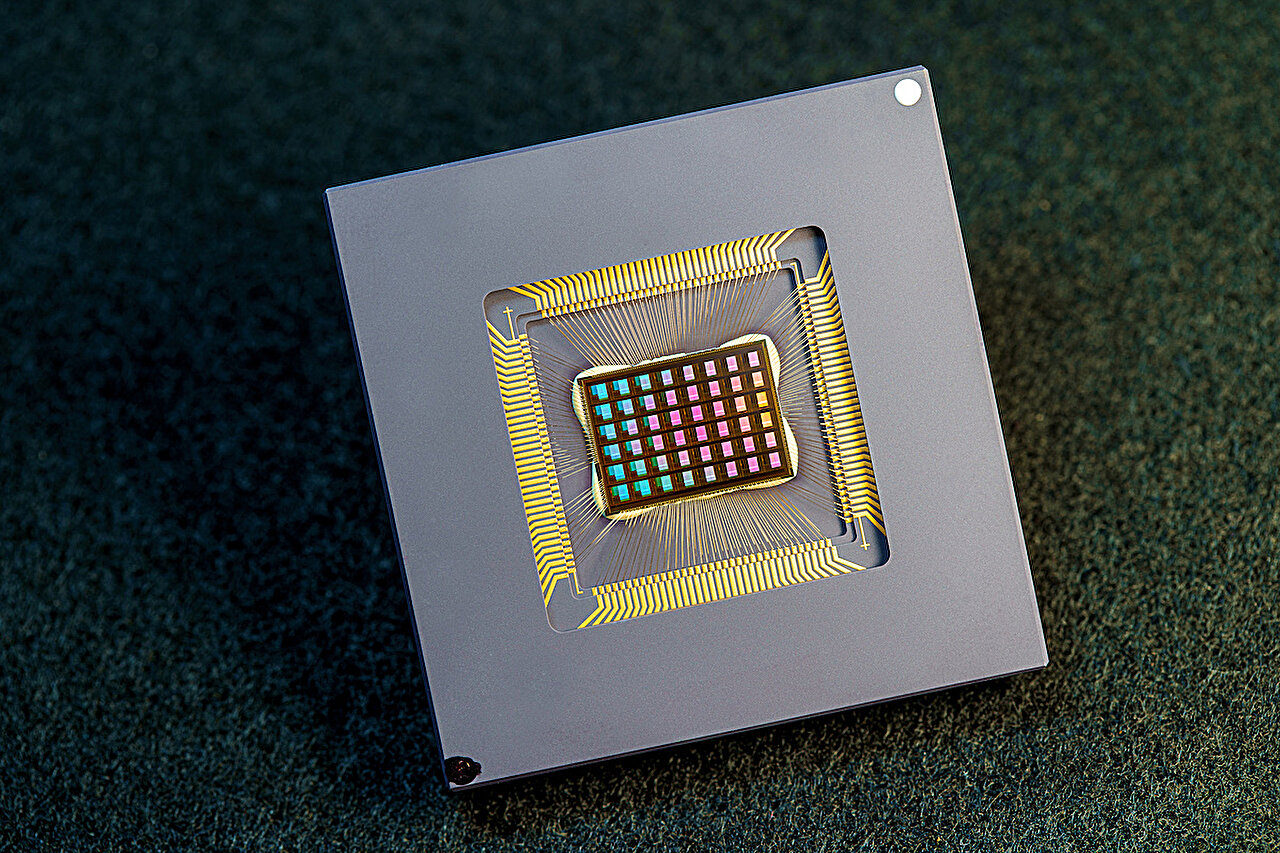

The 2022 development of the NeuRRAM chip is an example of the progress being made in this area. Designed by a team led by Gert Cauwenberghs, this neuromorphic chip demonstrated the potential for highly dynamic and versatile computing. NeuRRAM executes computations directly in memory, which drastically reduces the energy consumption compared to conventional computing systems. It can run a wide range of AI applications with remarkable efficiency, paving the way for more practical and scalable neuromorphic systems.
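
The idea of computing in memory can be sketched with an idealized resistive crossbar. Weights are stored as conductances, inputs are applied as voltages on the rows, and each column's output current is the sum of voltage times conductance, so a matrix-vector product is produced where the weights are stored. This is a simplified textbook model under assumed names (`crossbar_mvm`, `conductances`, `voltages`), not a description of NeuRRAM's actual circuitry.

```python
def crossbar_mvm(conductances, voltages):
    """Idealized crossbar matrix-vector multiply.

    Hypothetical illustration: conductances[i][j] is the stored weight at
    row i, column j, and voltages[i] is the input applied to row i. The
    current collected on column j is I_j = sum_i voltages[i] * conductances[i][j].
    Real devices add noise, nonlinearity, and limited precision, none of
    which is modeled here.
    """
    n_cols = len(conductances[0])
    return [
        sum(v * row[j] for v, row in zip(voltages, conductances))
        for j in range(n_cols)
    ]


stored_weights = [[1.0, 2.0],
                  [3.0, 4.0]]
inputs = [0.5, 1.0]
print(crossbar_mvm(stored_weights, inputs))
```

In hardware, all column currents form at once and the weights never leave the array, which is why this style of computation avoids much of the data movement that dominates energy use in conventional systems.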

The Importance of Collaboration

As the field moves forward, the authors stress the importance of collaboration between academia and industry. Scaling neuromorphic computing systems requires the expertise of both researchers and engineers working closely together to translate theoretical concepts into real-world applications. Dhireesha Kudithipudi, the paper’s corresponding author and the Robert F. McDermott Endowed Chair at the University of Texas at San Antonio, highlights the development of new architectures and frameworks that can be deployed in commercial applications as a critical opportunity.

Such collaboration, reflected in the diversity of the paper’s co-authors, will play a key role in ensuring that neuromorphic computing systems can evolve in ways that meet the needs of both industries and consumers. Amitava Majumdar, director of the Division of Data-Enabled Scientific Computing at the San Diego Supercomputer Center, echoes this sentiment, noting that bringing neuromorphic resources to the national user community will help accelerate the transition from research to application.

Expanding the Reach of Neuromorphic Computing

For neuromorphic computing to reach its full potential, it must be accessible to a wider range of users, including those in fields outside of computer science. The authors argue that there needs to be a concerted effort to develop user-friendly programming languages and frameworks to make it easier for researchers and practitioners in diverse domains to work with neuromorphic systems. By lowering the barrier to entry, these efforts would foster collaboration across industries and disciplines, enabling the development of neuromorphic applications that can have a transformative impact on fields like healthcare, robotics, and more.

Future Directions: Towards Brain-Like Intelligence

The review article also looks toward the future of neuromorphic computing, suggesting that the integration of new chip technologies will bring us closer to achieving brain-like intelligence. These include emerging silicon-based chips as well as devices built from novel materials that could push the boundaries of what neuromorphic systems can do. One of the key challenges ahead is the development of self-learning systems that replicate the brain’s ability to adapt and change over time. This would require not only advances in hardware but also breakthroughs in software and algorithms that allow neuromorphic systems to learn from experience, much like the brain does.

Furthermore, the article stresses that while the road to scalable neuromorphic systems is complex and multifaceted, the progress made so far shows significant promise. By building on the advances of chips like NeuRRAM and pursuing the key features outlined in the roadmap, neuromorphic computing can provide a new approach to computational systems, one that is faster, more efficient, and better aligned with the way nature solves complex problems.

Conclusion

In summary, neuromorphic computing represents a paradigm shift that could redefine how we approach computing at scale. The insights presented in the Nature review provide a clear path toward building systems that mimic the brain’s efficiency, enabling new possibilities in AI, scientific computing, healthcare, and many other fields. As the demand for more efficient and powerful computing systems grows, neuromorphic computing could offer a viable solution that meets these challenges while also providing a glimpse into the future of brain-like, self-learning computing systems. With strong collaboration between academia and industry and the continued development of cutting-edge technologies, neuromorphic computing may soon become a cornerstone of next-generation computational systems.

Reference: Dhireesha Kudithipudi et al, Neuromorphic computing at scale, Nature (2025). DOI: 10.1038/s41586-024-08253-8