Quantum computing promises to change how we solve problems that are intractable for classical computers. A major goal in the field is achieving quantum advantage: the point at which a quantum computer outperforms classical systems on a specific task. Researchers have focused on demonstrating this advantage through quantum random sampling, a task in which a quantum system generates random samples from a probability distribution that classical computers cannot sample from efficiently. This article explores work by researchers at Universität Innsbruck, Freie Universität Berlin, and other institutes, who have proposed a new method to verify quantum random sampling efficiently, in a way that brings quantum advantage closer to reality.

Understanding Quantum Advantage

Quantum advantage has become one of the defining benchmarks for quantum computing research. Unlike classical computers, which process information in binary bits (0s and 1s), quantum computers use quantum bits (qubits). Qubits exploit principles of quantum mechanics such as superposition and entanglement, so a register of n qubits can exist in a superposition of 2^n basis states. The goal is to perform specific types of tasks far faster or more efficiently than the most advanced classical supercomputers.

One of the primary tasks that researchers use to demonstrate quantum advantage is quantum random sampling. This task involves drawing random samples from a probability distribution, a problem that classical computers struggle with, especially as the system size grows. While classical algorithms require increasingly prohibitive amounts of time and resources, quantum computers, which manipulate superposed and entangled states directly, may be able to perform random sampling far more efficiently.

The Challenge of Verifying Quantum Random Sampling

Quantum random sampling might sound like a simple task, but proving that a quantum computer has performed it correctly is much more complicated. For small systems the results can be checked classically, but for large quantum systems classical checks become computationally prohibitive.

Traditional verification approaches, such as cross-entropy benchmarking, compare the measured outcomes with the expected distribution. These checks require the ideal output probabilities to be computed classically, so they do not scale well to larger systems. The difficulty lies in the “classical hardness” of the task itself: the same property that makes the sampling hard to simulate makes its output hard to check. Without efficient classical tools to check the results, the validity of the quantum computation remains in question, and the problem becomes more pressing as quantum computers scale up.
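To make the scaling problem concrete, here is a minimal Python sketch of the linear cross-entropy benchmark in its commonly used form, 2^n times the mean ideal probability of the observed outcomes, minus 1. The function name `linear_xeb` and the toy two-qubit numbers are illustrative assumptions and are not taken from the paper.

```python
def linear_xeb(ideal_probs, samples):
    """Linear XEB score: 2^n * (mean ideal probability of observed outcomes) - 1.

    ideal_probs: list of length 2^n, computed classically (the expensive part).
    samples: observed outcomes, each encoded as an integer in [0, 2^n).
    """
    dim = len(ideal_probs)
    mean_p = sum(ideal_probs[x] for x in samples) / len(samples)
    return dim * mean_p - 1.0


# Toy two-qubit example (hypothetical numbers): the ideal device only ever
# outputs 00 or 01, each with probability 1/2.
ideal = [0.5, 0.5, 0.0, 0.0]
faithful_samples = [0, 1, 0, 1]   # consistent with the ideal distribution
uniform_samples = [0, 1, 2, 3]    # what a fully depolarized device would give

score_faithful = linear_xeb(ideal, faithful_samples)
score_uniform = linear_xeb(ideal, uniform_samples)
```

The `ideal_probs` list has 2^n entries, so both storing it and computing it become exponentially expensive as the number of qubits n grows. That cost is what an efficient verification protocol needs to avoid.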

A New Approach: Measurement-Based Quantum Computation

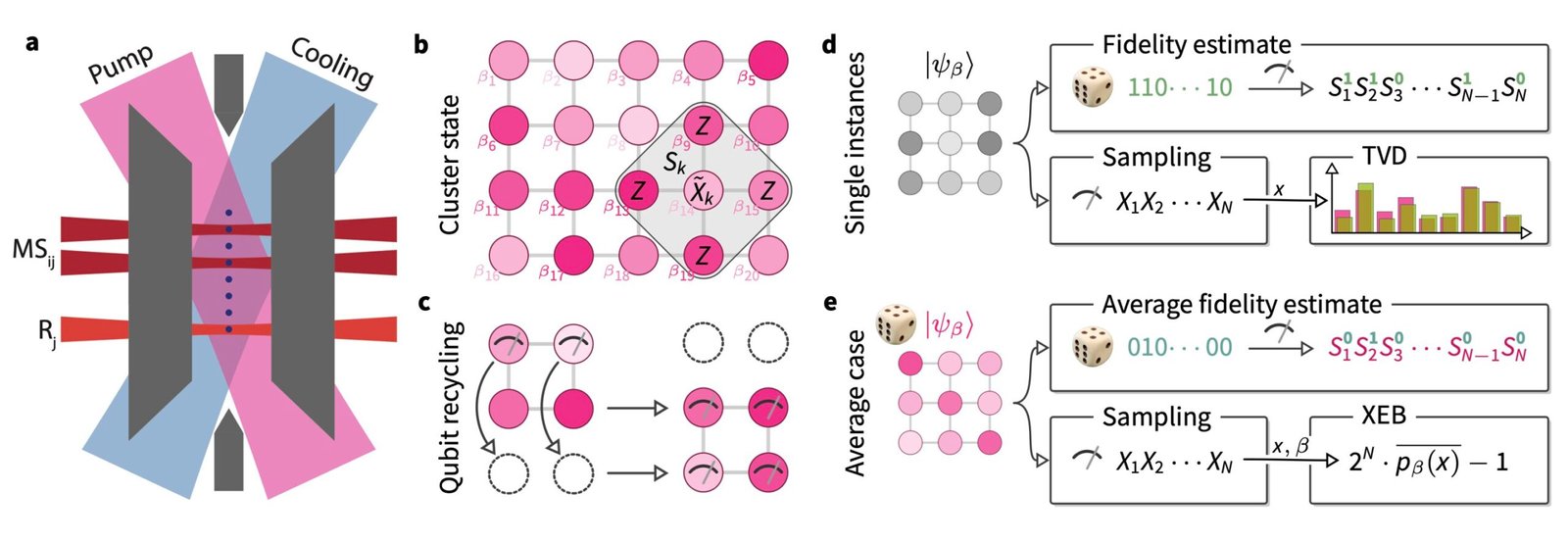

In response to the challenges of verifying quantum random sampling, a team of researchers has introduced a verification protocol based on the measurement-based model of quantum computation (MBQC). This model differs from the more familiar gate-based model. Instead of applying a sequence of unitary operations to the qubits, the computation starts from an entangled quantum state prepared at the outset and proceeds by measuring its individual qubits.

The research team led by Jens Eisert and colleagues from universities in Innsbruck, Berlin, and other research institutes recently outlined this verification protocol in the journal Nature Communications. Their work demonstrated a method of verifying quantum random sampling using a trapped-ion quantum processor. This new technique enables the verification of random sampling outcomes without relying heavily on resource-intensive classical computation.

According to Jens Eisert, the approach grew out of his curiosity about which computational tasks quantum systems might solve more efficiently than classical computers, an edge that would later become known as “quantum advantage.” Eisert and his collaborators, including experimentalists from Innsbruck and Berlin, recognized that the realization of quantum advantage in quantum random sampling was just around the corner. They set out to design an experimental setup that could demonstrate this.

Experimenting with Trapped-Ion Quantum Processors

The experiment utilized a trapped-ion quantum processor, one of the most powerful platforms for building quantum computers today. In this platform, individual ions, which serve as qubits, are confined using electromagnetic fields. Each ion can be manipulated using laser pulses, and these manipulations allow scientists to control the state of the qubits with extreme precision.

To demonstrate the protocol, the researchers first prepared a cluster state, which plays a crucial role in measurement-based quantum computing. The cluster state is a highly entangled state that serves as the resource for computations performed using MBQC. Such entangled states, and the outcomes of measuring them, become difficult to describe classically as they grow, which is where the potential advantage of quantum computers comes from.

For their experiment, the researchers prepared a 4×4 cluster state using 16 qubits (individual trapped ions). They then performed measurements on this state to extract data for random sampling. Because the qubits are entangled, the outcomes of these measurements are correlated, and each complete set of outcomes is one sample from the probability distribution defined by the quantum state and the chosen measurements.
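The sampling step can be sketched classically for a very small lattice. The Python example below builds a 2×2 cluster state as a state vector, rotates each qubit so that a standard readout corresponds to a measurement in the XY plane, and draws samples. The lattice size, the random angles, the seed, and all function names are illustrative assumptions. The experiment's actual measurement settings are not given in this article.

```python
import cmath
import math
import random


def cluster_state(rows, cols):
    """State vector of a rows x cols cluster state.

    Every qubit starts in |+>, then a controlled-Z acts on each lattice edge.
    Qubit q is stored as bit q of the basis-state index.
    """
    n = rows * cols
    edges = []
    for r in range(rows):
        for c in range(cols):
            q = r * cols + c
            if c + 1 < cols:
                edges.append((q, q + 1))      # horizontal neighbour
            if r + 1 < rows:
                edges.append((q, q + cols))   # vertical neighbour
    amp = 1.0 / math.sqrt(2 ** n)
    state = []
    for basis in range(2 ** n):
        # Each CZ flips the sign when both of its qubits are 1.
        flips = sum(((basis >> a) & 1) * ((basis >> b) & 1) for a, b in edges)
        state.append(amp * (-1) ** flips)
    return state


def rotate_to_xy_basis(state, qubit, theta):
    """Apply H * diag(1, exp(-i*theta)) to one qubit.

    Afterwards a computational-basis readout of that qubit is equivalent to
    measuring in the basis (|0> +/- exp(i*theta)|1>) / sqrt(2).
    """
    out = list(state)
    phase = cmath.exp(-1j * theta)
    s = 1.0 / math.sqrt(2)
    bit = 1 << qubit
    for basis in range(len(state)):
        if (basis & bit) == 0:
            a0 = state[basis]
            a1 = phase * state[basis | bit]
            out[basis] = s * (a0 + a1)
            out[basis | bit] = s * (a0 - a1)
    return out


def outcome_probabilities(rows, cols, angles):
    """Probability of every joint measurement outcome for the given angles."""
    state = cluster_state(rows, cols)
    for qubit, theta in enumerate(angles):
        state = rotate_to_xy_basis(state, qubit, theta)
    return [abs(a) ** 2 for a in state]


rng = random.Random(7)  # arbitrary seed, for reproducibility only
angles = [rng.uniform(0.0, 2.0 * math.pi) for _ in range(4)]
probs = outcome_probabilities(2, 2, angles)
samples = rng.choices(range(len(probs)), weights=probs, k=10)
bitstrings = [format(x, "04b") for x in samples]  # leftmost character is qubit 3
```

The state vector here has 2^n entries, so this brute-force simulation only works for a handful of qubits. A quantum processor produces the same kind of samples by measuring the physical state directly.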

One of the key insights of the protocol is that the quality of the quantum state can be verified efficiently, without intensive classical computation. To verify that the sampling was correct, the researchers estimated the fidelity of the cluster state, a measure of how close the prepared state is to the ideal one, by comparing their measured outcomes with known expectations. This allowed them to estimate the reliability of the results and confirm that the quantum sampling had been performed successfully.
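One standard way to estimate the fidelity of a cluster state uses its stabilizers: operators of the form K_v = X on qubit v times Z on each neighbour of v, all of which leave the ideal state unchanged. The fidelity equals the average expectation value over all 2^n products of these generators, so measuring randomly chosen products gives an unbiased estimate. The Python sketch below simulates that idea on a 2×2 lattice. It is an illustration under stated assumptions, not the paper's exact estimator; the noise model (a 0.3 rad Z over-rotation on one qubit), the shot count, and all function names are hypothetical.

```python
import cmath
import math
import random


def grid_neighbors(rows, cols):
    """For each qubit of a rows x cols lattice, a bit mask of its neighbours."""
    masks = []
    for r in range(rows):
        for c in range(cols):
            mask = 0
            if c > 0:
                mask |= 1 << (r * cols + c - 1)
            if c + 1 < cols:
                mask |= 1 << (r * cols + c + 1)
            if r > 0:
                mask |= 1 << ((r - 1) * cols + c)
            if r + 1 < rows:
                mask |= 1 << ((r + 1) * cols + c)
            masks.append(mask)
    return masks


def cluster_state(masks):
    """Ideal cluster state: |+> everywhere, sign flip per edge with both qubits 1."""
    n = len(masks)
    amp = 1.0 / math.sqrt(2 ** n)
    state = []
    for b in range(2 ** n):
        # Summing neighbours over set qubits counts each active edge twice.
        twice = sum(bin(b & masks[v]).count("1") for v in range(n) if (b >> v) & 1)
        state.append(amp * (-1) ** (twice // 2))
    return state


def apply_generator(state, v, masks):
    """Return K_v|state>, where K_v = X_v times Z on every neighbour of v."""
    bit = 1 << v
    return [(-1) ** bin(b & masks[v]).count("1") * state[b ^ bit]
            for b in range(len(state))]


def stabilizer_expectation(state, subset, masks):
    """<state|S|state> for S = product of the K_v whose bit v is set in subset."""
    phi = state
    for v in range(len(masks)):
        if (subset >> v) & 1:
            phi = apply_generator(phi, v, masks)
    return sum((a.conjugate() * b).real for a, b in zip(state, phi))


def exact_fidelity(state, masks):
    """Average over all 2^n stabilizer elements; equals |<cluster|state>|^2."""
    n = len(masks)
    return sum(stabilizer_expectation(state, s, masks) for s in range(2 ** n)) / 2 ** n


def estimate_fidelity(state, masks, shots, rng):
    """Monte Carlo estimate: one random stabilizer and one simulated +/-1 outcome per shot."""
    n = len(masks)
    total = 0
    for _ in range(shots):
        subset = rng.getrandbits(n)
        expectation = stabilizer_expectation(state, subset, masks)
        total += 1 if rng.random() < (1.0 + expectation) / 2.0 else -1
    return total / shots


masks = grid_neighbors(2, 2)
ideal = cluster_state(masks)
# Hypothetical imperfection: a coherent Z over-rotation by 0.3 rad on qubit 0.
noisy = [cmath.exp(1j * 0.3 * (1 - 2 * (b & 1))) * a for b, a in enumerate(ideal)]
estimate = estimate_fidelity(noisy, masks, shots=2000, rng=random.Random(1))
```

In this simulation the expectation values are computed from the state vector. On hardware, each shot would instead measure every qubit in the X, Y, or Z basis dictated by the chosen stabilizer and multiply the outcomes. The number of shots needed for a given precision does not depend on the size of the state, which is why this kind of check avoids the exponential classical cost.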

Key Findings and Contributions

The researchers successfully demonstrated their verification protocol and observed that the random samples produced by the processor agreed closely with their predictions. This agreement provides confidence in the performance of the quantum system and in its effectiveness at random sampling.

Thomas Monz and Martin Ringbauer, experimental team leads from Innsbruck, highlighted how the use of trapped ions and the ability to recycle qubits improved the efficiency and scalability of their experiment. Their approach shows how quantum systems could be used for computational tasks beyond the reach of classical methods.

“We could verify the correctness of the state preparation and the sampling through fidelity estimation, providing a clearer picture of how successful the quantum process had been,” Monz explained. The researchers also compared their technique with conventional methods like cross-entropy benchmarking, finding that their protocol required fewer resources.

The ability to verify quantum random sampling through this new methodology allows researchers to better understand how to test the limits of classical and quantum computers and may have broad implications in the field of quantum computing. Understanding when quantum advantage kicks in, particularly for random sampling tasks, is an essential step in advancing the development of practical quantum computing platforms.

What Comes Next for Quantum Computing?

The success of this experiment paves the way for future advancements in quantum computing research. The study is significant from both a technological and a conceptual perspective. Technologically, the use of trapped ions as qubits, and recycling them for multiple operations, represents an important step toward improving quantum computer efficiency. Conceptually, the team’s findings suggest that quantum experiments can be verified efficiently, even when classical methods of validation fail.

This research not only shows promise for quantum computing’s role in random sampling but also provides a blueprint for verification protocols that could be adapted for testing other types of quantum computations. As quantum computers grow in size and complexity, being able to verify their correctness with minimal classical resources will become crucial.

“By developing and testing this protocol on a trapped-ion quantum processor, we made a significant contribution toward creating more efficient and reliable quantum computing systems,” said Eisert. “Moreover, we hope this work will be a stepping stone for broader efforts to make quantum computing platforms more resilient and robust against errors.”

In conclusion, the successful verification of quantum random sampling, as demonstrated by this international research team, marks an important milestone in the race to demonstrate quantum advantage. While much remains to be done, this breakthrough may inspire further progress in designing quantum systems that can outperform classical computers in increasingly complex tasks. The convergence of theory, experimentation, and engineering is driving the future of quantum computing, and the insights from this work will likely have wide-reaching implications for the field.

Reference: Martin Ringbauer et al., Verifiable measurement-based quantum random sampling with trapped ions, Nature Communications (2025). DOI: 10.1038/s41467-024-55342-3.