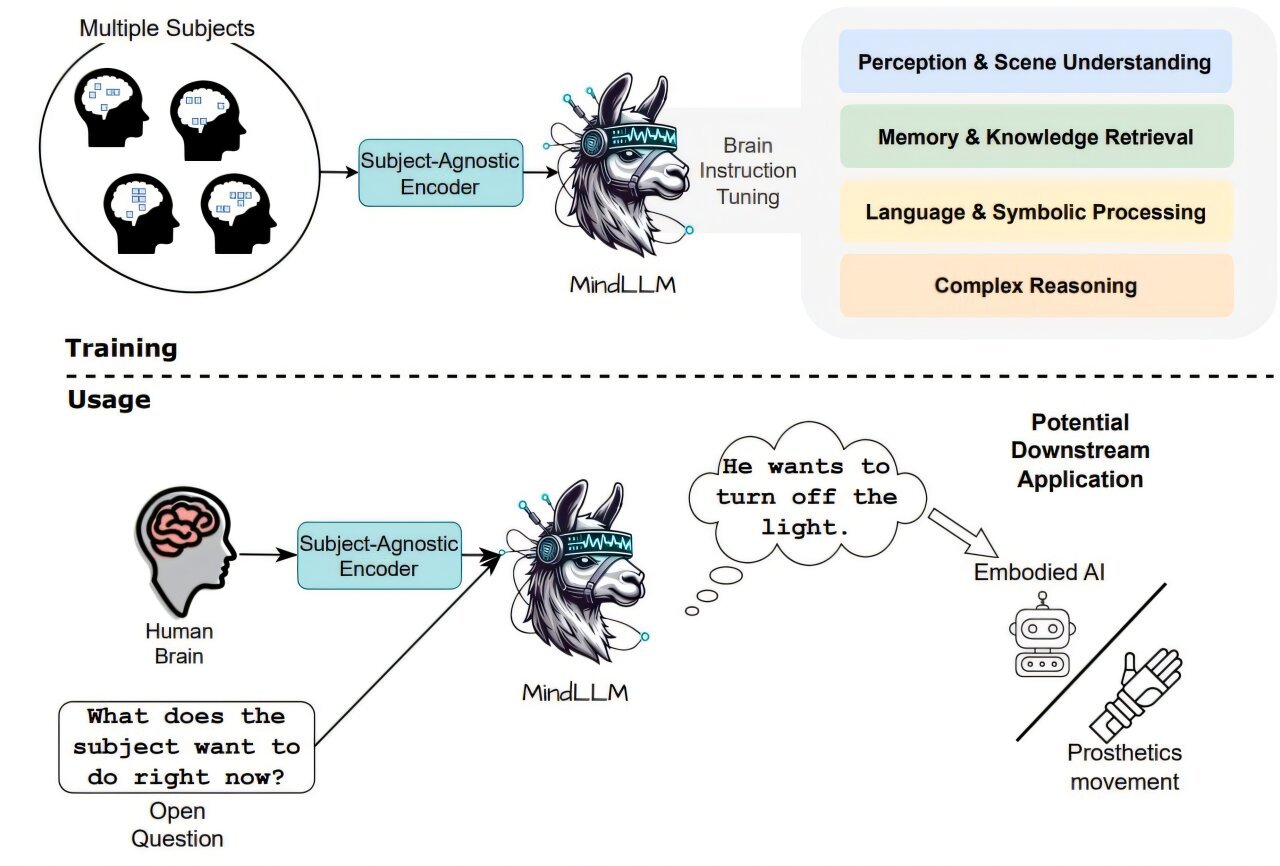

Researchers from Yale University, Dartmouth College, and the University of Cambridge have developed MindLLM, a model that decodes functional magnetic resonance imaging (fMRI) signals into natural language. The model is subject-agnostic: a single model decodes brain activity from different people without relying on subject-specific parameters. On fMRI-to-text benchmarks it outperforms previous methods by clear margins. MindLLM’s potential applications span neuroscience research, brain-computer interfaces (BCIs), and medical diagnostics, and it could change how researchers work with brain data.

Key Advancements of MindLLM

MindLLM is designed to address several long-standing challenges in brain signal decoding. Previous models were limited in predictive performance, supported only a narrow range of tasks, and generalized poorly across subjects. Many existing approaches, for instance, rely heavily on subject-specific parameters, which prevents them from generalizing to new individuals. MindLLM instead combines an fMRI encoder built around a neuroscience-informed attention mechanism with a large language model (LLM), which substantially improves both performance and generalizability.

The reported gains are substantial. Compared with prior models such as UMBRAE, BrainChat, and UniBrain, MindLLM improves performance by 12.0% on downstream tasks, by 16.4% on generalization to unseen subjects, and by 25.0% on adaptation to novel tasks. These results indicate that MindLLM decodes brain activity into text more reliably across subjects and task types than earlier approaches, making it one of the most versatile models built for this purpose.

Understanding the Model Design

MindLLM has two main components: an fMRI encoder and an LLM. To decode brain activity into text, the system first has to process fMRI data. An fMRI scan divides the brain into tiny 3D units called voxels, which act like 3D pixels, each reflecting activity in a small volume of brain tissue. However, which voxels are recorded, and how many, varies significantly across individuals, which makes it difficult to build a single model that works for all subjects.
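
To make the voxel idea concrete, the toy sketch below flattens a tiny 3D scan into a list of (coordinate, activity) pairs, keeping only the voxels inside a subject’s mask. The grid, the masks, and the function name `extract_voxels` are hypothetical stand-ins, not MindLLM’s preprocessing code; the point is only that two subjects scanned on the same grid can end up with different numbers of voxels.

```python
from typing import List, Tuple

Coord = Tuple[int, int, int]
Grid = List[List[List[float]]]
Mask = List[List[List[bool]]]


def extract_voxels(volume: Grid, mask: Mask) -> List[Tuple[Coord, float]]:
    """Return (coordinate, activity) pairs for every voxel inside the mask."""
    voxels = []
    for x, plane in enumerate(volume):
        for y, row in enumerate(plane):
            for z, value in enumerate(row):
                if mask[x][y][z]:  # keep only voxels in this subject's region of interest
                    voxels.append(((x, y, z), value))
    return voxels


# Toy 2x2x2 scan shared by two hypothetical subjects whose masks differ.
scan = [[[0.1, 0.2], [0.3, 0.4]], [[0.5, 0.6], [0.7, 0.8]]]
mask_a = [[[True, True], [False, True]], [[True, False], [False, False]]]
mask_b = [[[True, True], [True, True]], [[True, True], [False, True]]]

subject_a = extract_voxels(scan, mask_a)
subject_b = extract_voxels(scan, mask_b)
print(len(subject_a), len(subject_b))  # → 4 7
```

A model that expects a fixed-length input vector cannot accept both lists as they stand, which is the input-dimension problem MindLLM has to handle.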

The difficulty stems from the fact that brains are not structurally identical. The number and arrangement of voxels therefore differ from person to person: in the study, subjects had between 12,682 and 17,907 voxels. Because each subject produces an input of a different size, an encoder with a fixed input dimension would need subject-specific input layers, complicating the decoding process. MindLLM avoids this with a neuroscience-informed attention mechanism in its fMRI encoder.

Rather than treating each subject’s voxels as a fixed-length vector, this attention mechanism separates the functional information of each voxel (where it sits and what role its brain region plays) from its raw fMRI value. This lets the model draw on existing neuroscience knowledge, accept inputs of any size, and treat different subjects consistently. The result is better generalization and more accurate decoding across individuals.
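
The idea can be illustrated with a minimal attention-pooling sketch in pure Python. As a simplifying assumption, a fixed set of learned query vectors attends over the voxels, each voxel’s key is computed only from its position and functional features, and its value comes only from its fMRI activity. Because the number of queries is fixed, the output has the same shape whether a subject contributes 3 voxels or 17,907. All names and numbers are hypothetical, and MindLLM’s actual encoder will differ in its details.

```python
import math
from typing import List, Sequence

Vector = List[float]


def softmax(scores: Sequence[float]) -> Vector:
    """Numerically stable softmax."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def voxel_attention_pool(
    queries: List[Vector],      # m query vectors; m is fixed and shared by all subjects
    voxel_keys: List[Vector],   # one key per voxel, from position/functional features only
    voxel_values: List[float],  # one raw fMRI activity value per voxel
    value_proj: Vector,         # hypothetical projection of a scalar activity into d_v dims
) -> List[Vector]:
    """Pool any number of voxels into exactly m tokens of size len(value_proj)."""
    d_k = len(voxel_keys[0])
    tokens = []
    for q in queries:
        # Attention weights depend only on where/what each voxel is (its key)...
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d_k) for k in voxel_keys]
        weights = softmax(scores)
        # ...while the mixed content is each voxel's activity (its value).
        # Since value_i = activity_i * value_proj, the weighted sum factors as below.
        pooled = sum(w * v for w, v in zip(weights, voxel_values))
        tokens.append([pooled * p for p in value_proj])
    return tokens


queries = [[1.0, 0.0], [0.0, 1.0]]             # m = 2 toy queries
keys_a = [[0.9, 0.1], [0.2, 0.8], [0.5, 0.5]]  # subject A: 3 voxels
keys_b = keys_a + [[0.7, 0.3], [0.1, 0.9]]     # subject B: 5 voxels
proj = [1.0, -1.0, 0.5]
out_a = voxel_attention_pool(queries, keys_a, [0.3, -0.2, 0.1], proj)
out_b = voxel_attention_pool(queries, keys_b, [0.3, -0.2, 0.1, 0.4, 0.0], proj)
print(len(out_a), len(out_a[0]), len(out_b), len(out_b[0]))  # → 2 3 2 3
```

The attention weights never see activity values and the values never see position, so location and signal stay separated. Projecting a scalar activity with a single vector makes every output token a scaled copy of `value_proj`; a real encoder would use richer learned projections.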

Brain Instruction Tuning (BIT) for Enhanced Semantic Understanding

MindLLM also introduces Brain Instruction Tuning (BIT), a training approach that strengthens the model’s ability to extract semantic information from fMRI signals. BIT draws on large-scale fMRI datasets in which multiple individuals are shown the same stimuli (e.g., images or videos), and each stimulus comes with textual annotations. By training on this multi-subject fMRI data together with the annotations, the model learns how patterns of brain activity correspond to specific concepts and meanings.
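
As a rough illustration of how multi-subject data can be turned into instruction-tuning examples, the sketch below pairs every subject’s recording of a stimulus with every text annotation of that stimulus. It assumes, without confirmation from the paper, that a BIT example is an (fMRI, instruction, response) triple; the class and function names and the toy data are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Annotation:
    instruction: str  # e.g. a captioning prompt or a question about the stimulus
    response: str     # target text the language model should produce


@dataclass
class BitExample:
    subject_id: str
    stimulus_id: str
    fmri: List[float]  # this subject's voxel activity for this stimulus
    instruction: str
    response: str


def build_bit_examples(
    recordings: Dict[str, Dict[str, List[float]]],  # subject -> stimulus -> fMRI
    annotations: Dict[str, List[Annotation]],       # stimulus -> text annotations
) -> List[BitExample]:
    examples = []
    for subject_id, by_stimulus in recordings.items():
        for stimulus_id, fmri in by_stimulus.items():
            # Each annotation of a stimulus is paired with every subject who saw it,
            # so the same text target is learned from several different brains.
            for ann in annotations.get(stimulus_id, []):
                examples.append(
                    BitExample(subject_id, stimulus_id, fmri, ann.instruction, ann.response)
                )
    return examples


# Toy data: both subjects saw img1; only s1 saw img2.
recordings = {
    "s1": {"img1": [0.2, -0.1, 0.4], "img2": [0.0, 0.3, 0.1]},
    "s2": {"img1": [0.1, 0.0, 0.5, -0.2]},  # a different brain, a different voxel count
}
annotations = {
    "img1": [
        Annotation("Describe the image.", "A dog running along a beach."),
        Annotation("What animal is shown?", "A dog."),
    ],
    "img2": [Annotation("Describe the image.", "A bowl of fruit on a table.")],
}
examples = build_bit_examples(recordings, annotations)
print(len(examples))  # → 5
```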

This semantic grounding is central to the model’s interpretability and overall performance. In practice, BIT helps MindLLM map neural patterns onto specific ideas or concepts, improving its accuracy on tasks such as brain captioning, question answering, and reasoning.

Improved Performance and Generalization

MindLLM was evaluated on comprehensive fMRI-to-text benchmarks derived from the Natural Scenes Dataset (NSD), a widely used fMRI dataset. The benchmarks test whether the model can turn brain activity into meaningful text: generating captions of the images a subject was viewing, answering questions about the stimuli presented to the subjects, and reasoning about them.

MindLLM generalized to new subjects 16.4% better than previous subject-agnostic models, meaning it copes better with differences between individuals and is more robust for broad use. It also showed a 25.0% improvement in novel task adaptation, suggesting that it can take on a wide variety of tasks with little to no additional fine-tuning.

This adaptability and generalization make MindLLM well suited to real-world settings where subjects differ, such as personalized healthcare, neuroscientific research, and BCIs.

Beyond Captions: Handling Complex Tasks

Many earlier models focused mainly on generating captions from fMRI signals evoked by visual stimuli. MindLLM goes further and handles a much wider range of tasks, including symbolic language processing, knowledge retrieval, and complex reasoning.

MindLLM can also handle memory-based tasks, such as retrieving descriptions of previously seen images, which makes it more relevant to cognitive neuroscience. Its open-ended question answering further extends its potential in medical and research settings that call for more nuanced interactions with brain data.

These capabilities are supported by MindLLM’s use of standardized neuroscientific atlases, such as those of Glasser and Rolls. The atlases supply functional priors: they describe what each voxel’s location means functionally, and the model keeps this information separate from the voxel’s activity value. Incorporating these resources lets the model generalize across subjects while keeping its decoding consistent with current understanding of brain function.
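
One plausible way to turn atlas information into per-voxel features is sketched below. The voxel’s normalized coordinates are concatenated with a one-hot region code from each atlas, and the activity value is deliberately left out, so these features could feed the keys of an attention layer while activity feeds the values. The two toy atlases (with 2 and 3 regions) stand in for real parcellations such as the Glasser and Rolls atlases, and the feature layout and function names are assumptions, not MindLLM’s documented format.

```python
from typing import Dict, List, Tuple

Coord = Tuple[int, int, int]


def region_code(labels: Dict[Coord, int], coord: Coord, n_regions: int) -> List[float]:
    """One-hot code for the atlas region containing `coord` (all zeros if unlabeled)."""
    code = [0.0] * n_regions
    region = labels.get(coord)
    if region is not None:
        code[region] = 1.0
    return code


def voxel_key_features(
    coord: Coord,
    atlases: List[Tuple[Dict[Coord, int], int]],  # (coordinate -> region index, region count) per atlas
    grid_shape: Tuple[int, int, int],
) -> List[float]:
    """Position and atlas features for one voxel; its activity value is deliberately excluded."""
    # Coordinates scaled to [0, 1] along each axis of the grid.
    features = [c / (n - 1) if n > 1 else 0.0 for c, n in zip(coord, grid_shape)]
    for labels, n_regions in atlases:
        features.extend(region_code(labels, coord, n_regions))
    return features


# Two toy atlases over a 2x2x3 grid: a coarse one with 2 regions and a finer one with 3.
coarse = ({(1, 0, 2): 1, (0, 0, 0): 0}, 2)
fine = ({(1, 0, 2): 2, (0, 0, 0): 0}, 3)
features = voxel_key_features((1, 0, 2), [coarse, fine], grid_shape=(2, 2, 3))
print(features)  # → [1.0, 0.0, 1.0, 0.0, 1.0, 0.0, 0.0, 1.0]
```

In a full model, a learned projection would map these features to keys whose size matches the attention queries.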

Future Directions: Temporal Modeling and Real-Time Decoding

Current implementations of MindLLM process static fMRI snapshots, each capturing brain activity at a single moment. Future versions may add temporal modeling to capture how brain activity evolves over time, for example with recurrent architectures or sequential attention mechanisms. This would let the model track the progression of thoughts and better reflect the dynamic nature of brain activity.
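
To show what a recurrent approach could look like, here is a minimal Elman-style RNN that runs over a sequence of per-snapshot embeddings, updating a hidden state that carries information from earlier time points forward. This is a hypothetical extension, not part of MindLLM: it assumes each fMRI snapshot has already been encoded into a fixed-size vector, and the weights are arbitrary toy values.

```python
import math
from typing import List

Vector = List[float]
Matrix = List[Vector]


def matvec(m: Matrix, v: Vector) -> Vector:
    """Matrix-vector product for row-major nested lists."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def rnn_over_snapshots(snapshots: List[Vector], w_h: Matrix, w_x: Matrix, b: Vector) -> List[Vector]:
    """Run h_t = tanh(W_h h_{t-1} + W_x x_t + b) over a sequence of snapshot embeddings."""
    h = [0.0] * len(b)  # initial hidden state: no history yet
    states = []
    for x in snapshots:
        # The new state mixes memory of earlier snapshots with the current one.
        h = [math.tanh(p + q + c) for p, q, c in zip(matvec(w_h, h), matvec(w_x, x), b)]
        states.append(h)
    return states


snapshots = [[0.5, -0.2], [0.1, 0.3], [-0.4, 0.6]]  # three consecutive time points
w_h = [[0.5, 0.0], [0.0, 0.5]]  # toy recurrent weights
w_x = [[1.0, 0.0], [0.0, 1.0]]  # toy input weights (identity)
bias = [0.0, 0.0]
states = rnn_over_snapshots(snapshots, w_h, w_x, bias)
print(len(states))  # → 3
```

A sequential attention mechanism would be the other option mentioned above; the recurrent version is shown only because it is the shortest to write out.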

Such an advance would be particularly valuable for neuroprosthetics, where real-time decoding of brain signals is needed to control external devices, and for mental state tracking, which could have important implications for mental health monitoring and cognitive rehabilitation.

Impact on Brain-Computer Interfaces and Neuroscience

The potential applications of MindLLM are broad, especially in BCIs, where decoding brain activity into meaningful commands could let people with motor impairments control devices or interact with their environment using only their thoughts. With further development toward real-time decoding, MindLLM could also provide insights into cognitive states, opening new possibilities for monitoring and supporting mental health.

MindLLM’s interpretable view of how brain activity translates into semantic information is also valuable for basic neuroscience. Its strong performance and generalization make it a useful tool for studying the relationship between brain function and cognition, which could support new discoveries in language processing, perception, and decision-making.

Conclusion

MindLLM is a significant step forward in decoding brain activity into natural language. By combining a neuroscience-informed attention mechanism with a large language model, it overcomes key limitations of previous approaches and delivers better generalization, novel task adaptation, and semantic understanding. It has considerable potential in both neuroscientific research and brain-computer interfaces, and future work on temporal modeling and real-time decoding could extend its capabilities further. MindLLM could become a foundational tool for understanding the brain and for working with neural data.

Reference: Weikang Qiu et al., MindLLM: A Subject-Agnostic and Versatile Model for fMRI-to-Text Decoding, arXiv (2025). DOI: 10.48550/arxiv.2502.15786