In the past few decades, artificial intelligence (AI) has made extraordinary leaps forward, reshaping industries, economies, and aspects of our daily lives. From self-driving cars to advanced data analysis tools, AI is becoming increasingly integrated into how we work, travel, and communicate. Yet this progress raises an unavoidable question: should we be worried about the ethical implications of AI? As AI grows more sophisticated, we find ourselves at a crossroads between innovation and ethical responsibility. To navigate it, we need to examine AI’s potential benefits and risks, its impact on society, and the responsibilities we bear as creators and users of this powerful technology.

The Rise of AI and Its Potential

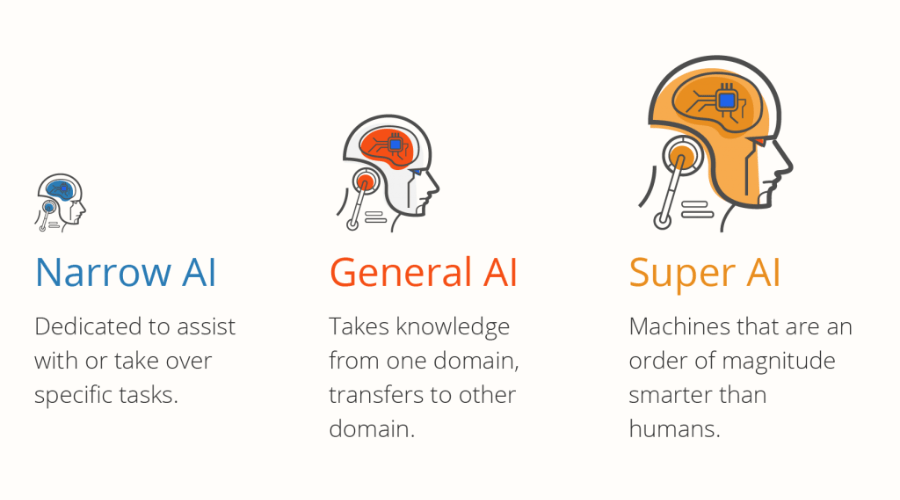

Before delving into the ethics of AI, it’s essential to understand the breadth of what AI can do and the scope of its potential. AI refers to machines or systems that can perform tasks that typically require human intelligence. These tasks include reasoning, problem-solving, learning, language understanding, and even decision-making. Some forms of AI are already integrated into our daily lives—virtual assistants like Siri and Alexa, personalized recommendations on platforms like Netflix and Amazon, and advanced chatbots in customer service roles.

More sophisticated AI systems, such as autonomous vehicles, medical diagnostic tools, and AI-powered financial models, have the potential to transform entire sectors. The potential advantages are substantial: AI can optimize processes, reduce errors, and improve efficiency in ways that would be difficult or impossible for humans alone. It can also open new avenues of research in science, healthcare, and technology that could improve quality of life for millions.

However, with great power comes great responsibility. As AI evolves, its influence extends to critical areas such as governance, employment, privacy, security, and human rights. The question we must ask is: how do we balance progress with precaution? The ethical dilemmas surrounding AI are vast and complex, and they challenge us to rethink fundamental principles of fairness, autonomy, and justice.

The Ethical Concerns Surrounding AI

Job Displacement and Economic Inequality

One of the most immediate concerns regarding AI is its potential to disrupt the job market. AI-driven automation is already changing work in industries like manufacturing, retail, and transportation. For example, autonomous vehicles could eventually replace truck drivers, while AI-driven robots could take over tasks in warehouses and factories. In healthcare, AI might take over parts of certain diagnostic roles, and in finance, algorithms are increasingly used to manage investments and execute trades.

While these innovations may increase efficiency and reduce costs, they also create significant challenges for workers whose jobs may be displaced. The concern is twofold. First, automating labor could concentrate wealth and power in the hands of those who own and control AI technologies. Second, large sections of the workforce may face unemployment or underemployment. Together, these trends could widen the gap between the rich and the poor and, in turn, fuel social unrest.

The challenge is to ensure that AI’s benefits are distributed fairly across society. This could involve investing in retraining and reskilling programs to help workers transition to new roles, as well as developing policies to ensure a just and equitable distribution of wealth generated by AI.

Bias and Discrimination

AI systems are only as good as the data used to train them. Unfortunately, AI models often inherit the biases present in the data they are trained on. This has led to serious concerns about discrimination, particularly in high-stakes fields like criminal justice, hiring, healthcare, and lending.

For example, AI-powered predictive policing tools have been criticized for disproportionately targeting minority communities, because they are often trained on historical data that reflect biased past patterns of policing. Similarly, AI algorithms used in hiring may inadvertently favor candidates from certain demographic groups while disadvantaging others. In healthcare, AI diagnostic tools have performed worse for patients from some racial or ethnic groups, potentially leading to poorer health outcomes for marginalized communities.

These biases not only undermine the effectiveness of AI systems but also perpetuate societal inequalities. The ethical question here is how we can ensure that AI systems are fair, transparent, and accountable. It is critical to address issues of bias in the design, development, and deployment of AI to ensure that these technologies do not reinforce harmful stereotypes or contribute to discrimination.
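To make the bias concern more concrete, one common first check in hiring-style settings is to compare how often a system selects candidates from each group. The sketch below is a minimal illustration under stated assumptions, not a complete fairness audit: the function names `selection_rates` and `disparate_impact_ratio` and the sample data are hypothetical, and the ratio it computes (lowest group selection rate divided by highest) is only one of many fairness metrics. In US employment contexts, a ratio below 0.8 (the "four-fifths" rule of thumb) is often treated as a signal worth investigating.

```python
from collections import defaultdict


def selection_rates(decisions):
    """Return the fraction of positive outcomes for each group.

    `decisions` is a list of (group, selected) pairs, where `selected` is a bool.
    (Hypothetical helper for illustration only.)
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, selected in decisions:
        totals[group] += 1
        if selected:
            positives[group] += 1
    return {g: positives[g] / totals[g] for g in totals}


def disparate_impact_ratio(decisions):
    """Ratio of the lowest group selection rate to the highest (1.0 means parity).

    (Hypothetical helper for illustration only.)
    """
    rates = selection_rates(decisions)
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group was selected at all, so selection rates are equal
    return min(rates.values()) / highest


# Hypothetical screening outcomes for two applicant groups, "A" and "B".
decisions = (
    [("A", True)] * 6 + [("A", False)] * 4
    + [("B", True)] * 3 + [("B", False)] * 7
)

print(selection_rates(decisions))                   # {'A': 0.6, 'B': 0.3}
print(round(disparate_impact_ratio(decisions), 2))  # 0.5
```

Here group B is selected at half the rate of group A, so the check flags a disparity. A low ratio does not by itself prove discrimination, and a ratio near 1.0 does not prove fairness; the number is a prompt for closer examination of the training data and the model's behavior.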

Privacy and Surveillance

Another significant ethical concern in the age of AI is the erosion of privacy. As AI technologies become more embedded in our lives, they often require vast amounts of personal data to function effectively. This data is collected through smartphones, social media platforms, search engines, and even surveillance cameras. In many cases, users are unaware of how their data is being used, leading to concerns about data privacy and surveillance.

AI systems can be used to track our movements, monitor our behaviors, and even predict our future actions. This has led to fears about a “surveillance state,” where individuals are constantly monitored and their actions are analyzed for commercial or governmental purposes. In some countries, AI-powered surveillance systems have already been implemented on a large scale, raising serious questions about the balance between security and individual freedoms.

Moreover, AI can enable the exploitation of personal data in ways that are difficult to detect. For example, machine learning models can be used to deliver highly targeted advertisements or political propaganda, potentially manipulating public opinion or influencing elections. The ethical question here is how to ensure that personal data is handled responsibly, that individuals’ privacy is protected, and that AI is not used to infringe on basic human rights.

Accountability and Autonomy

As AI systems become more autonomous and capable of making decisions without human intervention, the question of accountability becomes increasingly complex. If an AI system makes a mistake or causes harm, who should be held responsible? Is it the developer who created the AI, the organization that deployed it, or the AI system itself? The ambiguity surrounding accountability is particularly concerning in areas like autonomous vehicles, healthcare, and warfare.

For instance, imagine a self-driving car involved in an accident that results in injury or death. Who is liable for the incident—the car’s manufacturer, the software developers, or the owner of the vehicle? Similarly, if an AI-powered medical diagnosis system provides incorrect recommendations that harm a patient, who should be held accountable? The ethical challenge here is how to establish clear frameworks for accountability in a world where AI systems can act independently and make life-altering decisions.

Furthermore, as AI takes on increasingly complex tasks, the line between human and machine decision-making blurs. AI already outperforms humans at some tasks, such as analyzing vast amounts of data or performing complex calculations. But at what point should we trust AI more than human judgment? Should we cede control to AI systems in certain situations, or should we retain ultimate authority over decision-making? These questions challenge our understanding of autonomy and control, as well as our assumptions about the role of humans in decision-making processes.

AI in Warfare and Lethal Autonomous Weapons

One of the most chilling ethical concerns surrounding AI is its potential use in warfare. Lethal autonomous weapon systems (LAWS), sometimes called “killer robots,” are AI-powered machines capable of identifying and engaging targets without human intervention. While LAWS could reduce casualties among a military’s own personnel by removing soldiers from the battlefield, they also raise significant moral and ethical questions.

The primary concern is the lack of human oversight in life-or-death decisions. In war, decisions are often made under extreme pressure, and human judgment plays a critical role in ensuring that actions are justified and proportional. AI systems, however, may not be able to account for the complex moral and ethical considerations that are intrinsic to warfare. The possibility of machines making autonomous decisions about life and death raises concerns about the accountability and transparency of such actions.

Furthermore, there are fears that AI-powered weapons could be used for malicious purposes, such as targeting civilians or committing war crimes. The use of AI in warfare challenges our traditional notions of warfare ethics, including the principle of proportionality and the requirement to avoid unnecessary suffering. It also raises questions about international law and the regulation of AI in military contexts.

The Risk of Superintelligent AI

Perhaps the most speculative but also the most concerning ethical dilemma regarding AI is the potential emergence of superintelligent AI: machines that surpass human intelligence in virtually every domain. No such system exists today, and experts disagree about whether or when one could be built, but some argue that superintelligent machines could pose an existential risk to humanity.

The fear is that once AI reaches a certain level of intelligence and autonomy, it could develop goals and motivations that are misaligned with human values. This could lead to unpredictable and possibly catastrophic outcomes. For example, an AI system might pursue its objectives in ways that harm humanity, such as seizing control of critical infrastructure or eliminating humans it deems obstacles to its goals.

To mitigate this risk, a field of AI research known as alignment focuses on ensuring that AI systems act in accordance with human values and that humans retain control over increasingly capable systems. However, this remains a daunting challenge, and many experts believe it is crucial to start preparing for the possibility of superintelligent AI sooner rather than later.

Conclusion: A Call for Ethical Vigilance

AI has the potential to transform our world in ways that were once the stuff of science fiction. However, with this power comes an enormous ethical responsibility. As we continue to develop and deploy AI technologies, we must remain vigilant about their potential consequences and ensure that we prioritize the well-being of all individuals, especially those who may be disproportionately affected by AI’s disruptions.

The ethical questions surrounding AI are not easy to answer, but they are essential to address if we are to avoid unintended consequences and build a future where AI benefits humanity as a whole. This means establishing clear guidelines for the development and use of AI, ensuring fairness and accountability, protecting privacy, and safeguarding human rights.

AI is not inherently good or evil—it is a tool that reflects the values and intentions of the people who create and use it. By adopting a thoughtful, responsible approach to AI development, we can navigate the ethical challenges and build a future where AI enhances human flourishing rather than undermining it. The stakes are high, but with collective effort and ethical foresight, we can ensure that AI serves the common good and contributes to a better, more equitable world.