Uncovering the relationship between the structure of neural networks and their function, particularly how the brain’s connectivity shapes its activity, is a fundamental question in neuroscience. Investigating this relationship directly in animal brains is challenging because of the extraordinary complexity of neural connections and the invasive surgery needed to access and manipulate the brain’s internal structures. In recent years, lab-grown neurons, which can be guided to form specific connections, have emerged as a promising alternative to animal experiments. These in vitro systems offer a less invasive and more controllable way to study neural activity, with the potential to yield insights for both basic neuroscience and applications in medicine and technology.

At the heart of this investigation lies the brain’s dynamic nature. The brain can adapt, learn, and change over time, and neurons, the cells that transmit electrical and chemical signals, are the basis of this adaptability. The brain’s plasticity, its capacity to rewire connections and to strengthen or weaken synapses in response to stimuli and experience, is a major reason its functions are so difficult to understand. As lead author Nobuaki Monma points out, “The brain is difficult to understand, in part, because it is dynamic—it can learn to respond differently to the same stimuli over time based on a number of factors.”

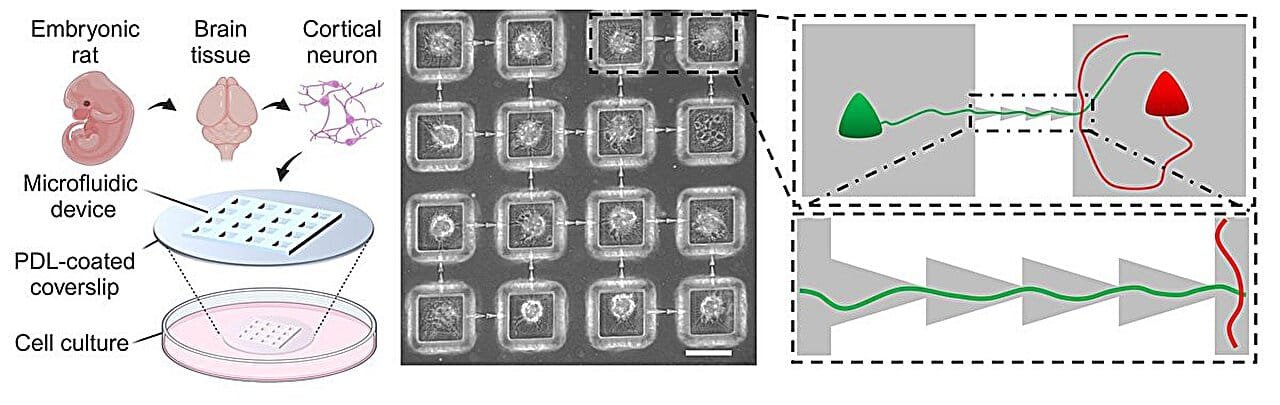

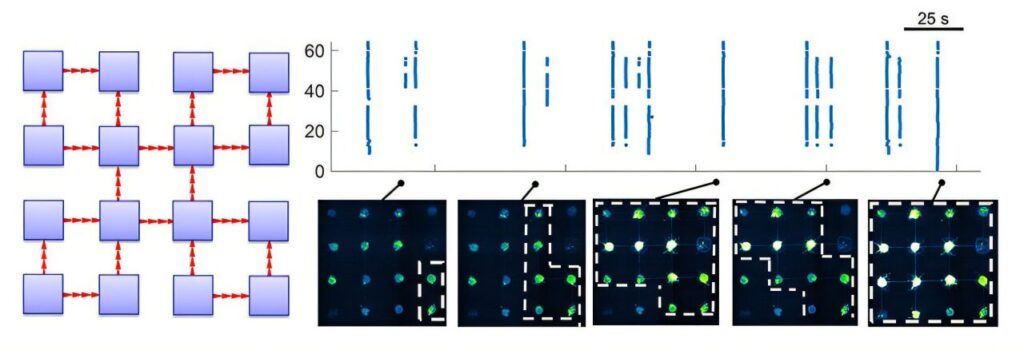

To probe these mechanisms more effectively, researchers are turning to laboratory-grown neuronal networks, which allow a high degree of control over experimental conditions and over how the neurons are connected. By fabricating networks that mimic the modular connectivity observed in animal nervous systems, researchers can better model how different parts of the brain work together. In this study, the team used microchannels to embed directional connections between neural modules, mimicking the directional flow of information in the brain. Because the connections between modules ran in a feedforward direction, the architecture avoided the excessive excitation that is a common problem in cultured neuronal networks.
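To make “modular” and “directional” wiring concrete, here is a minimal sketch, assuming a simple random-graph model: neurons are grouped into modules, wired densely inside each module and sparsely between neighbouring modules. In the directional variant, module *m* projects only to module *m* + 1 (a feedforward chain); in the control variant, neighbouring modules couple both ways. The helper names `modular_adjacency` and `count_intermodule_edges`, the chain layout, and the connection probabilities are illustrative assumptions, not the microchannel design used in the study.

```python
import random


def modular_adjacency(n_modules, n_per_module, p_intra, p_inter,
                      directional=True, seed=0):
    """Hypothetical helper: build a random modular network.

    Returns (adj, module_of), where adj[pre][post] == 1 means neuron `pre`
    sends a connection to neuron `post`, and module_of[i] is the module
    index of neuron i.
    """
    rng = random.Random(seed)
    n = n_modules * n_per_module
    module_of = [i // n_per_module for i in range(n)]
    adj = [[0] * n for _ in range(n)]
    for pre in range(n):
        for post in range(n):
            if pre == post:
                continue  # no self-connections
            m_pre, m_post = module_of[pre], module_of[post]
            if m_pre == m_post:
                p = p_intra  # dense, bidirectional wiring inside a module
            elif directional:
                # Assumed feedforward chain: module m projects only to m + 1.
                p = p_inter if m_post == m_pre + 1 else 0.0
            else:
                # Non-directional control: neighbouring modules couple both ways.
                p = p_inter if abs(m_post - m_pre) == 1 else 0.0
            if rng.random() < p:
                adj[pre][post] = 1
    return adj, module_of


def count_intermodule_edges(adj, module_of):
    """Count connections pointing downstream (forward) vs upstream (backward)."""
    forward = backward = 0
    for pre, row in enumerate(adj):
        for post, connected in enumerate(row):
            if connected and module_of[pre] != module_of[post]:
                if module_of[post] > module_of[pre]:
                    forward += 1
                else:
                    backward += 1
    return forward, backward


adj, module_of = modular_adjacency(4, 25, p_intra=0.3, p_inter=0.05)
print(count_intermodule_edges(adj, module_of))  # backward count is always 0 here
```

In the directional case, every entry from a downstream module back to an upstream one stays empty, which corresponds to the one-way coupling described above. A dense list-of-lists matrix keeps the sketch dependency-free; for networks of thousands of neurons, a sparse representation would be the more practical choice.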

The researchers then used calcium imaging, a technique that visualizes neuronal activity in real time by detecting changes in calcium ion concentration within neurons. When a neuron fires, calcium ions flow into the cell, and fluorescent calcium indicators convert that rise into a change in brightness, letting the team track the spontaneous activity of the networks. The networks with directional connections exhibited more complex activity patterns than networks lacking this directional structure. This finding supports the hypothesis that the directionality of neural connections plays a key role in generating richer, more dynamic activity.
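The study’s exact analysis pipeline is not described here, so the following is only a sketch under common assumptions: each neuron’s raw fluorescence trace is converted to ΔF/F using a baseline F0 (here, the mean of the lowest 20% of samples), thresholded into active and inactive frames, and the pairwise Pearson correlations between neurons are summarized by an index that is 0 when all pairs fall in the same correlation bin (for example, full synchrony) and 1 when correlations spread evenly across [0, 1]. The function names, baseline rule, threshold, and 20-bin histogram are hypothetical choices, not the authors’ parameters.

```python
import math
from itertools import combinations


def delta_f_over_f(trace, baseline_fraction=0.2):
    """ΔF/F with F0 = mean of the lowest `baseline_fraction` of samples (assumed rule)."""
    k = max(1, int(len(trace) * baseline_fraction))
    f0 = sum(sorted(trace)[:k]) / k
    return [(f - f0) / f0 for f in trace]


def binarize(dff, threshold=0.5):
    """Mark a frame as active (1) when ΔF/F reaches a hypothetical threshold."""
    return [1 if x >= threshold else 0 for x in dff]


def pearson(x, y):
    """Pearson correlation; returns 0.0 if either trace is constant (silent)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        return 0.0
    return sxy / math.sqrt(sxx * syy)


def functional_complexity(activity, n_bins=20):
    """Score how evenly pairwise correlations spread over [0, 1].

    0 -> every pair falls in the same bin (e.g. full synchrony);
    1 -> correlations are spread uniformly across all bins.
    """
    rs = [pearson(a, b) for a, b in combinations(activity, 2)]
    counts = [0] * n_bins
    for r in rs:
        r = min(max(r, 0.0), 1.0)  # negative correlations go to the lowest bin
        counts[min(int(r * n_bins), n_bins - 1)] += 1
    total = len(rs)
    deviation = sum(abs(c / total - 1 / n_bins) for c in counts)
    return 1 - n_bins / (2 * (n_bins - 1)) * deviation


# Usage: three neurons firing in perfect lockstep give a complexity of about 0.
raw = [[1.0, 1.0, 1.0, 1.0, 3.0, 1.0, 1.0, 3.0, 1.0, 1.0]] * 3
activity = [binarize(delta_f_over_f(t)) for t in raw]
print(functional_complexity(activity))  # approximately 0, up to float rounding
```

Under this kind of measure, a network that fires only in network-wide bursts scores near 0, while one whose neurons show a wide range of pairwise correlations scores higher.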

The team also developed two mathematical models to explain the observed behavior and shed light on the network mechanisms underlying it. By simulating different network configurations, the models helped identify combinations of modularity and connectivity that fostered greater dynamical complexity in neuronal activity. The interplay between modular structure, in which neurons are densely connected within groups and more sparsely between them, and the directional flow of signals between modules appeared central to producing these richer patterns.
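The two models themselves are not detailed here. As a much simpler illustration of why directionality can matter, the sketch below uses a hypothetical module-level cascade model (an assumption of this example, not either of the authors’ models): each spontaneous burst starts in a random module and spreads to each coupled neighbour with probability `p_trans`. The diversity of activity is measured, again as an assumption, by the Shannon entropy in bits of which set of modules each burst recruits.

```python
import math
import random
from collections import Counter


def simulate_bursts(n_modules, n_events, p_trans, directional, seed=0):
    """Hypothetical toy model: return the set of modules recruited by each burst."""
    rng = random.Random(seed)
    patterns = []
    for _ in range(n_events):
        start = rng.randrange(n_modules)  # spontaneous burst begins in a random module
        active = {start}
        frontier = [start]
        while frontier:
            m = frontier.pop()
            # Directional: activity can only move downstream (m -> m + 1).
            # Non-directional: it can move to either neighbour.
            targets = [m + 1] if directional else [m - 1, m + 1]
            for t in targets:
                if 0 <= t < n_modules and t not in active and rng.random() < p_trans:
                    active.add(t)
                    frontier.append(t)
        patterns.append(frozenset(active))
    return patterns


def pattern_entropy(patterns):
    """Shannon entropy (bits) of how often each activation pattern occurs."""
    counts = Counter(patterns)
    total = len(patterns)
    return sum((c / total) * math.log2(total / c) for c in counts.values())


for directional in (False, True):
    patterns = simulate_bursts(4, 1000, p_trans=1.0, directional=directional)
    label = "directional" if directional else "non-directional"
    print(label, len(set(patterns)), "patterns,",
          round(pattern_entropy(patterns), 2), "bits")
```

With `p_trans=1.0`, every non-directional burst recruits all four modules (one pattern, 0 bits), whereas a feedforward burst recruits only its starting module and those downstream of it, so four distinct patterns appear and the entropy is close to 2 bits. Lowering `p_trans` shows how the two architectures diverge at weaker coupling. This is a cartoon of the idea that directional coupling can curb network-wide excitation, not evidence about the real cultures.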

“The findings of this study are expected not only to deepen our fundamental understanding of neuronal networks in the brain, but also to find applications in fields such as medicine and machine learning,” says Associate Professor Hideaki Yamamoto. The potential implications extend beyond basic biology. For example, studying modular and directional connectivity in lab-grown neurons could inform the development of brain-machine interfaces and neural prosthetics. Understanding these mechanisms could also suggest new therapeutic strategies for neurological disorders in which connectivity and neuronal activity are disrupted, such as Alzheimer’s disease or epilepsy.

Perhaps most intriguingly, the work holds promise for artificial intelligence and machine learning. A long-standing aim in parts of AI research has been to design neural networks that capture how biological brains function. By incorporating insights from biological systems, researchers hope to create more biologically plausible artificial neural networks that are more efficient, adaptive, and capable of solving complex problems. Such systems could find uses in areas such as autonomous systems, data analysis, and decision-making tools modeled on human cognition.

The research is also part of a growing effort to create in vitro models that can stand in for the brain when studying connectivity in a controlled laboratory environment. Animal models remain invaluable, but they are limited by their invasiveness and by the difficulty of interpreting results across species. In vitro models built from lab-grown neurons offer a clearer view of how neurons function and interact, with fewer of the ethical concerns associated with live animal experiments. This approach could accelerate progress in neuroscience as researchers refine these models to replicate the human brain’s intricate network of connections more accurately.

Furthermore, the theoretical insights from this research could eventually be applied to modeling larger-scale networks, shedding light on brain-wide connectivity, the so-called “connectome.” The human connectome is the vast network of neural connections linking different regions of the brain, and understanding how these connections shape brain function could be a critical step toward understanding how the brain works as a whole. Combined with more precise methods for mapping the brain’s connections, such models could help researchers study complex cognitive functions, such as learning, memory, decision-making, and emotional regulation, in much greater detail.

As our understanding of neuronal networks deepens, the implications are far-reaching. The more we learn about the brain’s structure and function, the better equipped we will be to develop therapies for neurological disorders, improve brain-computer interfaces, and design more powerful and adaptable artificial intelligence systems. As the researchers behind this study note, their findings hold promise for a range of applications in both basic science and real-world technology.

Reference: Nobuaki Monma et al, Directional intermodular coupling enriches functional complexity in biological neuronal networks, Neural Networks (2024). DOI: 10.1016/j.neunet.2024.106967