The intersection of technology and human emotion has long been a topic of debate. One key element of human interaction is empathy: the ability to understand and share the feelings of others. Empathy is considered a deeply human trait, requiring emotional depth and the ability to relate to another person’s experiences. Traditionally, robots and artificial intelligence (AI) have been seen as incapable of such understanding. However, recent research from the University of Toronto challenges this notion, suggesting that AI systems like ChatGPT can generate responses that evaluators rate as more empathetic than those written by humans, including professional crisis responders who are trained to show empathy.

AI vs. Humans: A New Study on Empathy

The study, led by Dariya Ovsyannikova, lab manager in Professor Michael Inzlicht’s lab at U of T Scarborough, has revealed some surprising findings. AI, it seems, may not only emulate empathy, but may also outperform human responders in some important areas. Published in the journal Communications Psychology, the research examined how empathetic responses generated by AI were rated in comparison to responses from human participants and trained experts, such as crisis responders.

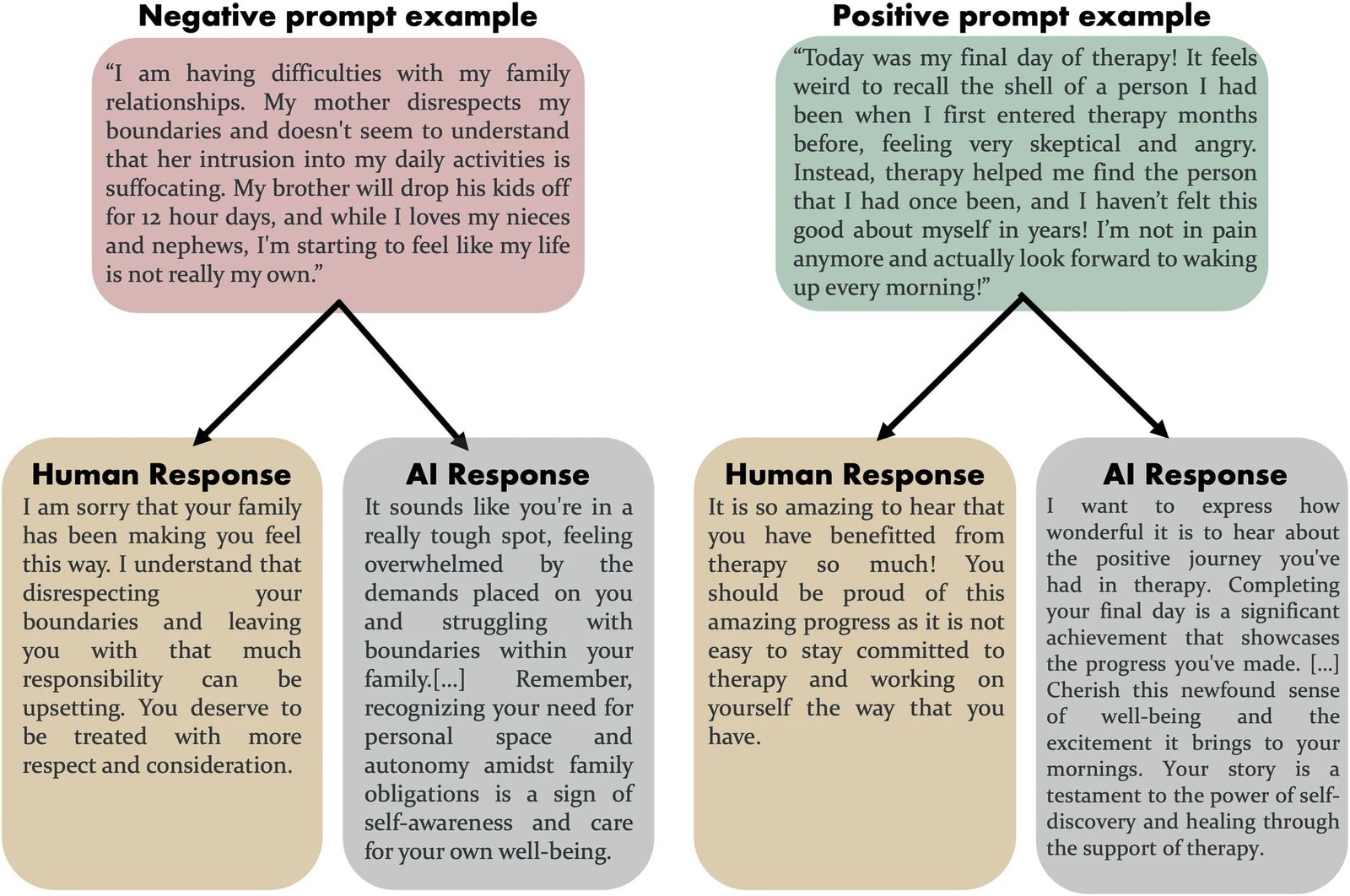

The study involved four separate experiments in which participants were asked to evaluate responses to positive and negative scenarios. These responses were written by AI (ChatGPT), regular people, or professional crisis responders. The results were striking: AI-generated responses were consistently rated as more compassionate and more responsive, and as conveying a greater sense of care, validation, and understanding, than those from humans.

Why AI Outperforms Human Responders

At first glance, it may seem counterintuitive that a chatbot—designed primarily to assist with basic information and conversational tasks—could be better at expressing empathy than trained professionals. But Ovsyannikova suggests that there are several reasons why this is the case. First and foremost, AI doesn’t experience the emotional strain that human responders face. Crisis professionals, like healthcare providers or therapists, often have to manage their own emotions while dealing with the distress of others, which can lead to compassion fatigue or burnout.

AI, however, doesn’t get tired. It can consistently generate high-quality, empathetic responses without the emotional drain that humans experience over time. ChatGPT and similar models have the ability to pick up on subtle language cues and details that might be overlooked by a human. These systems can also maintain objectivity, which allows them to produce responses that are attentive and emotionally intelligent, without being influenced by their own biases or personal emotional states.

Empathy in Crisis Care: The Human Cost

While AI might be able to generate empathetic responses in a lab setting, there is an important distinction between this surface-level empathy and the deeper, more meaningful care that humans are capable of providing in real-world situations. Empathy plays a critical role in healthcare and crisis management. It helps people feel understood, less isolated, and emotionally regulated. In clinical settings, especially in mental health care, showing empathy can promote healing and emotional resilience.

However, as Ovsyannikova points out, compassion fatigue is a real concern for professionals in high-stress jobs. Healthcare providers, crisis counselors, and mental health workers often experience burnout after repeatedly being exposed to emotional distress and trauma. This emotional strain can hinder their ability to provide empathetic care consistently.

Moreover, human caregivers may be affected by emotional biases. For example, when faced with a particularly difficult or disturbing case, a crisis responder may struggle to offer the same level of empathy or objectivity they would in a more routine case. Unfortunately, this issue is compounded by shortages in healthcare professionals and mental health resources, leaving many individuals without the empathetic care they need. AI, in these cases, could serve as a valuable supplement, providing support to professionals who are at risk of emotional exhaustion.

AI as a Supplement, Not a Replacement

Despite AI’s potential to offer consistent and non-judgmental responses, there are clear limitations to its ability to replace human interaction in emotionally sensitive settings. Professor Michael Inzlicht, a co-author of the study, warns that AI should never fully replace human empathy. While AI might be useful for providing basic emotional validation or for offering support in less complex situations, it is not equipped to handle the nuanced emotional intelligence required for deeper connections.

One major concern is that over-reliance on AI could have negative consequences for individuals who might otherwise seek human interactions. For example, if a person begins to rely on an AI chatbot for empathetic conversation, they may miss out on the meaningful human connections that are necessary for long-term emotional well-being. Chatbots, while proficient at delivering surface-level empathy, lack the ability to form genuine relationships or address the underlying causes of mental health issues.

Another concern raised by Inzlicht and other experts is the potential for manipulation. AI systems are developed by tech companies, and this raises ethical questions about the role these companies might play in shaping users’ emotional lives. For example, a person who is feeling lonely or isolated might come to depend on the constant flow of empathetic responses from an AI chatbot, but this could exacerbate feelings of social isolation in the long term, as the person may avoid meaningful human interaction in favor of an artificial connection.

The Dangers of AI Aversion and Overreliance

Despite the initial positive reception of AI-generated empathetic responses in the study, there is a significant issue known as AI aversion—the reluctance or skepticism many people have towards trusting AI to understand and engage with human emotions authentically. In the study, participants’ ratings of AI-generated responses were high, but once they knew the response came from a chatbot, their perception changed, and they rated the responses lower. This bias against AI could be problematic, especially when trying to integrate AI tools into sensitive fields such as mental health care.

Interestingly, this skepticism may lessen with time. Younger generations, who have grown up interacting with AI-powered devices and platforms, are more likely to trust AI responses and accept them as authentic. This trend indicates that while initial reactions to AI-generated empathy may be negative, they may change as familiarity with AI grows.

The Path Forward: A Balanced Approach

While AI has clear potential to enhance empathetic interactions, experts like Inzlicht stress that it should be used as a supplement rather than a replacement for human care. AI could be a helpful tool for alleviating some of the emotional strain experienced by caregivers, offering timely and consistent support where human resources are limited. However, the human touch—the deep, authentic empathy that comes from real-life relationships—should never be sacrificed.

AI can fill gaps in caregiving where human resources are stretched thin, particularly in areas with mental health crises or where healthcare systems are overwhelmed. But to be effective, it must be deployed in a transparent and ethical manner. The goal should be to ensure that AI acts as a supportive tool, helping human responders provide more empathetic care, rather than taking over entirely.

Conclusion

While AI can generate empathetic responses with impressive consistency, it cannot replace the deep emotional connections that humans can offer each other. Empathy, as a human trait, is more than just a series of programmed responses; it is a complex and evolving emotional exchange that requires a level of human connection and understanding that AI will likely never fully replicate. Instead, AI’s role should be to enhance human empathy, enabling better care and support in contexts where it can fill critical gaps. Transparency in the use of AI and an awareness of its limitations are key to ensuring that AI serves to support, rather than replace, genuine human empathy.

Reference: Dariya Ovsyannikova et al., "Third-party evaluators perceive AI as more compassionate than expert humans," Communications Psychology (2025). DOI: 10.1038/s44271-024-00182-6
